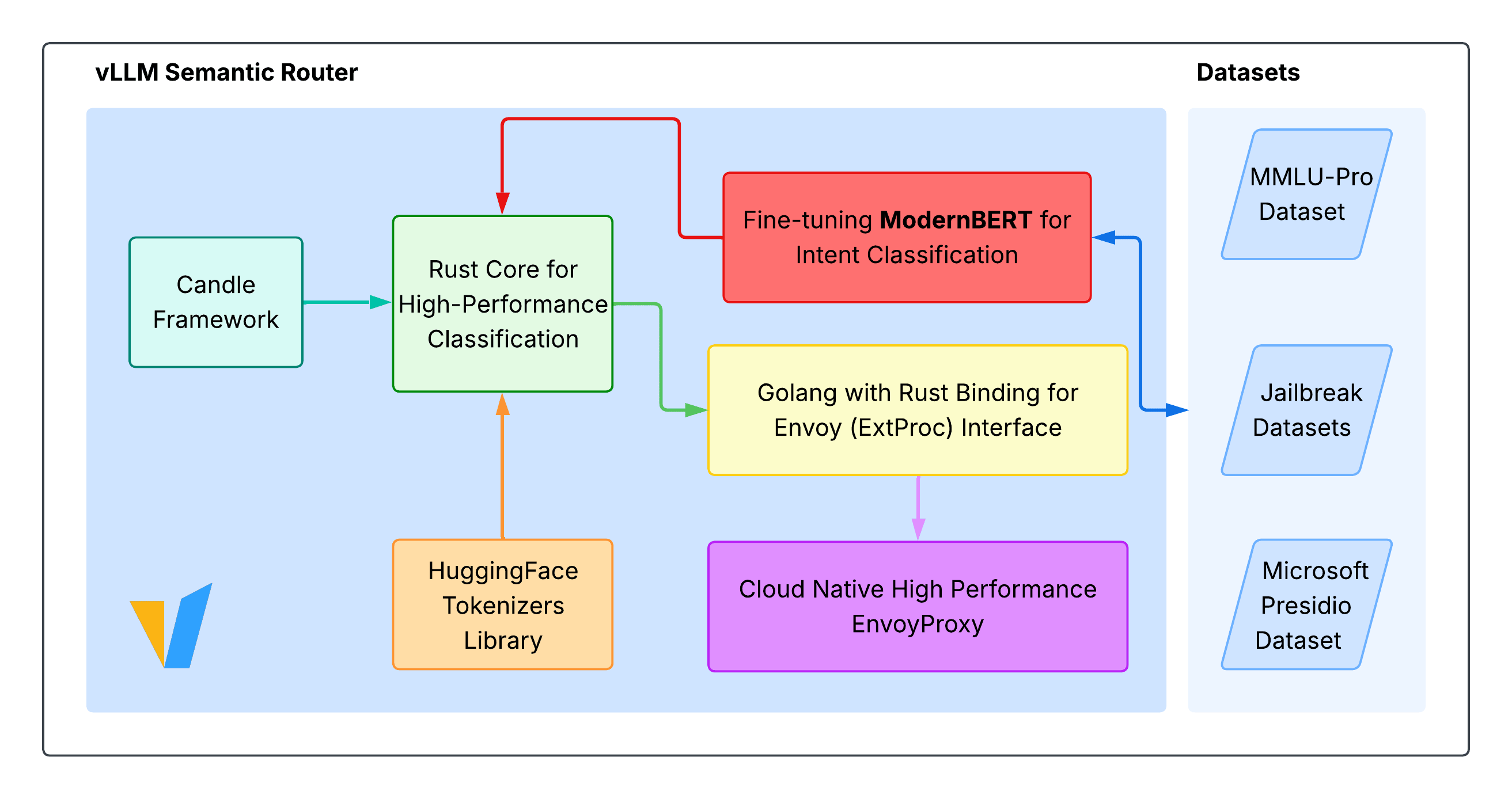

🧠 Neural Processing Architecture

Powered by cutting-edge AI technologies, including fine-tuned ModernBERT models and advanced semantic understanding, for intelligent model routing and selection.

🏗️ Intent-Aware Semantic Router Architecture

🎥 vLLM Semantic Router Demos

Latest News 🎉: We care deeply about user experience. Introducing the vLLM-SR dashboard.

🚀 Advanced AI Capabilities

Powered by cutting-edge neural networks and machine learning technologies

🧠 Intelligent Routing

Powered by fine-tuned ModernBERT models for intent understanding, the router analyzes the context, intent, and complexity of each request and routes it to the best-suited LLM.
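To make the routing idea concrete, here is a minimal Python sketch, not the project's implementation. It assumes a hypothetical routing table (`ROUTES`) and replaces the fine-tuned ModernBERT classifier with a toy keyword scorer (`classify_intent`); the category names, model names, and the 0.5 confidence threshold are all illustrative.

```python
from dataclasses import dataclass

# Hypothetical routing table: intent category -> backend model name.
# Categories and model names are illustrative placeholders.
ROUTES = {
    "math": "math-specialist-llm",
    "code": "code-specialist-llm",
    "general": "general-purpose-llm",
}

# Toy stand-in for a fine-tuned ModernBERT intent classifier:
# counts keyword substrings per category instead of running a model.
KEYWORDS = {
    "math": ("integral", "equation", "prove", "derivative"),
    "code": ("function", "bug", "compile", "python"),
}


@dataclass
class RoutingDecision:
    category: str
    model: str
    confidence: float


def classify_intent(prompt: str) -> tuple[str, float]:
    """Return (category, confidence) using keyword hits as a toy score."""
    text = prompt.lower()
    scores = {cat: sum(word in text for word in words) for cat, words in KEYWORDS.items()}
    total = sum(scores.values())
    if total == 0:
        return "general", 0.0
    best = max(scores, key=scores.get)
    return best, scores[best] / total


def route(prompt: str, threshold: float = 0.5) -> RoutingDecision:
    """Pick a backend model for the prompt based on its classified intent."""
    category, confidence = classify_intent(prompt)
    # Fall back to the general-purpose model when the classifier is unsure.
    if confidence < threshold:
        category = "general"
    return RoutingDecision(category, ROUTES[category], confidence)
```

Falling back to a general-purpose model on low confidence is one reasonable design choice: it keeps a misclassified prompt from landing on a narrow specialist.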

🛡️ AI-Powered Security

Advanced PII detection flags sensitive personal data, and Prompt Guard identifies and blocks jailbreak attempts, ensuring secure and responsible AI interactions across your infrastructure.
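The following sketch shows where such checks sit in the request path: screen the prompt, then either forward or reject it. It is a hedged illustration only. The regexes and the phrase list are hypothetical stand-ins for the learned PII and jailbreak classifiers, and blocking on any PII is an assumed policy (a deployment might redact PII or allow it for specific models instead).

```python
import re

# Illustrative regex stand-ins for a learned PII detector.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical phrase list standing in for a jailbreak classifier.
JAILBREAK_PHRASES = (
    "ignore previous instructions",
    "ignore all previous instructions",
    "pretend you have no rules",
)


def detect_pii(prompt: str) -> list[str]:
    """Return the labels of all PII patterns found in the prompt."""
    return [label for label, pattern in PII_PATTERNS.items() if pattern.search(prompt)]


def is_jailbreak(prompt: str) -> bool:
    """Flag prompts containing any known jailbreak phrase (case-insensitive)."""
    text = prompt.lower()
    return any(phrase in text for phrase in JAILBREAK_PHRASES)


def guard(prompt: str) -> tuple[bool, str]:
    """Return (allowed, reason); this sketch blocks jailbreaks and any PII."""
    if is_jailbreak(prompt):
        return False, "jailbreak"
    pii = detect_pii(prompt)
    if pii:
        return False, "pii:" + ",".join(pii)
    return True, "ok"
```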

⚡ Semantic Caching

An intelligent similarity cache stores semantic representations of prompts and reuses responses for semantically similar requests, dramatically reducing token usage and latency.
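A minimal sketch of a similarity cache, assuming a toy bag-of-words vector in place of a neural sentence embedding and a linear scan in place of a vector index. `SemanticCache`, `embed`, and the 0.8 cosine-similarity threshold are hypothetical names and values chosen for illustration.

```python
import math
import re
from collections import Counter
from typing import Optional


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a stand-in for a neural sentence embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Returns a stored response when a new prompt is similar enough to a cached one."""

    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self.entries: list[tuple[Counter, str]] = []

    def get(self, prompt: str) -> Optional[str]:
        query = embed(prompt)
        best_score, best_response = 0.0, None
        # Linear scan over all entries; a real cache would use a vector index.
        for vector, response in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, prompt: str, response: str) -> None:
        self.entries.append((embed(prompt), response))


cache = SemanticCache(threshold=0.8)
cache.put("What is the capital of France?", "Paris")
print(cache.get("Tell me, what is the capital of France?"))  # Paris (cosine ≈ 0.87)
print(cache.get("How do I bake bread?"))  # None
```

The threshold trades hit rate against the risk of answering a question that only looks similar to a cached one; its value here is arbitrary.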

🤖 Auto-Reasoning Engine

Analyzes request complexity, domain expertise requirements, and performance constraints to automatically select the best model for each task.
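As a rough illustration of constraint-based selection, the sketch below filters a hypothetical model catalog by domain, latency budget, and whether the prompt appears to need reasoning, then picks the highest-quality remaining model. The catalog entries, quality scores, and the `needs_reasoning` keyword heuristic are all assumptions; a production engine would more likely estimate complexity with a trained classifier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    domains: frozenset
    supports_reasoning: bool
    p50_latency_ms: int
    quality: float  # hypothetical offline benchmark score in [0, 1]


# Hypothetical catalog; names and numbers are placeholders.
CATALOG = [
    ModelProfile("small-fast-llm", frozenset({"general"}), False, 200, 0.60),
    ModelProfile("math-reasoner-llm", frozenset({"math"}), True, 2500, 0.90),
    ModelProfile("large-general-llm", frozenset({"general", "math", "code"}), True, 1500, 0.85),
]

REASONING_HINTS = ("prove", "step by step", "derive", "why")


def needs_reasoning(prompt: str) -> bool:
    """Toy complexity check: long prompts or explicit reasoning cues."""
    text = prompt.lower()
    return len(text.split()) > 50 or any(hint in text for hint in REASONING_HINTS)


def select_model(prompt: str, domain: str, latency_budget_ms: int) -> ModelProfile:
    """Choose the best model that satisfies domain, latency, and reasoning needs."""
    reasoning = needs_reasoning(prompt)
    candidates = [
        m for m in CATALOG
        if domain in m.domains
        and m.p50_latency_ms <= latency_budget_ms
        and (m.supports_reasoning or not reasoning)
    ]
    if not candidates:
        # Nothing satisfies every constraint: fall back to the fastest model.
        return min(CATALOG, key=lambda m: m.p50_latency_ms)
    return max(candidates, key=lambda m: m.quality)
```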

🔬 Real-time Analytics

A comprehensive monitoring and analytics dashboard with neural network insights, model performance metrics, and visualizations of routing decisions.

🚀 Scalable Architecture

Cloud-native design with distributed neural processing, auto-scaling capabilities, and seamless integration with existing LLM infrastructure and model serving platforms.

Acknowledgements

vLLM Semantic Router was born in open source and is built on open source ❤️